@inproceedings{xu2021accelerated,

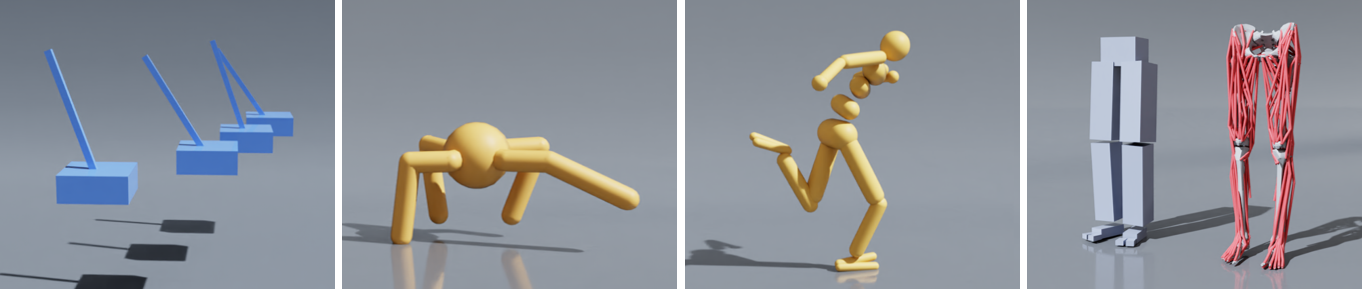

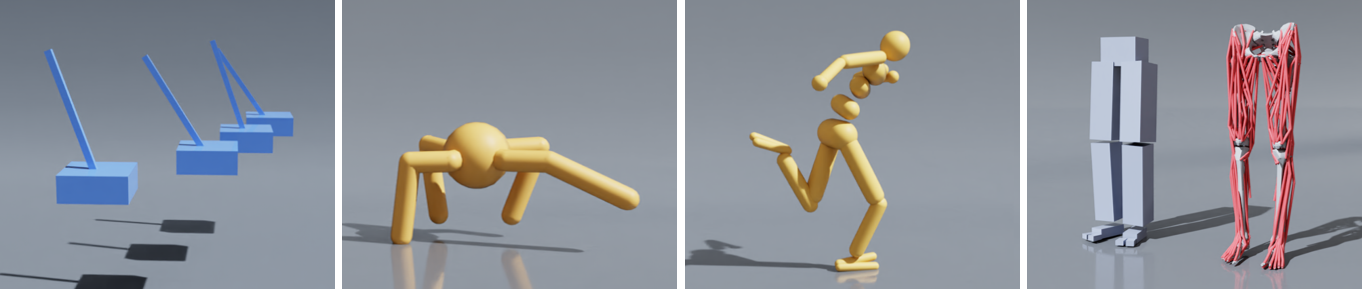

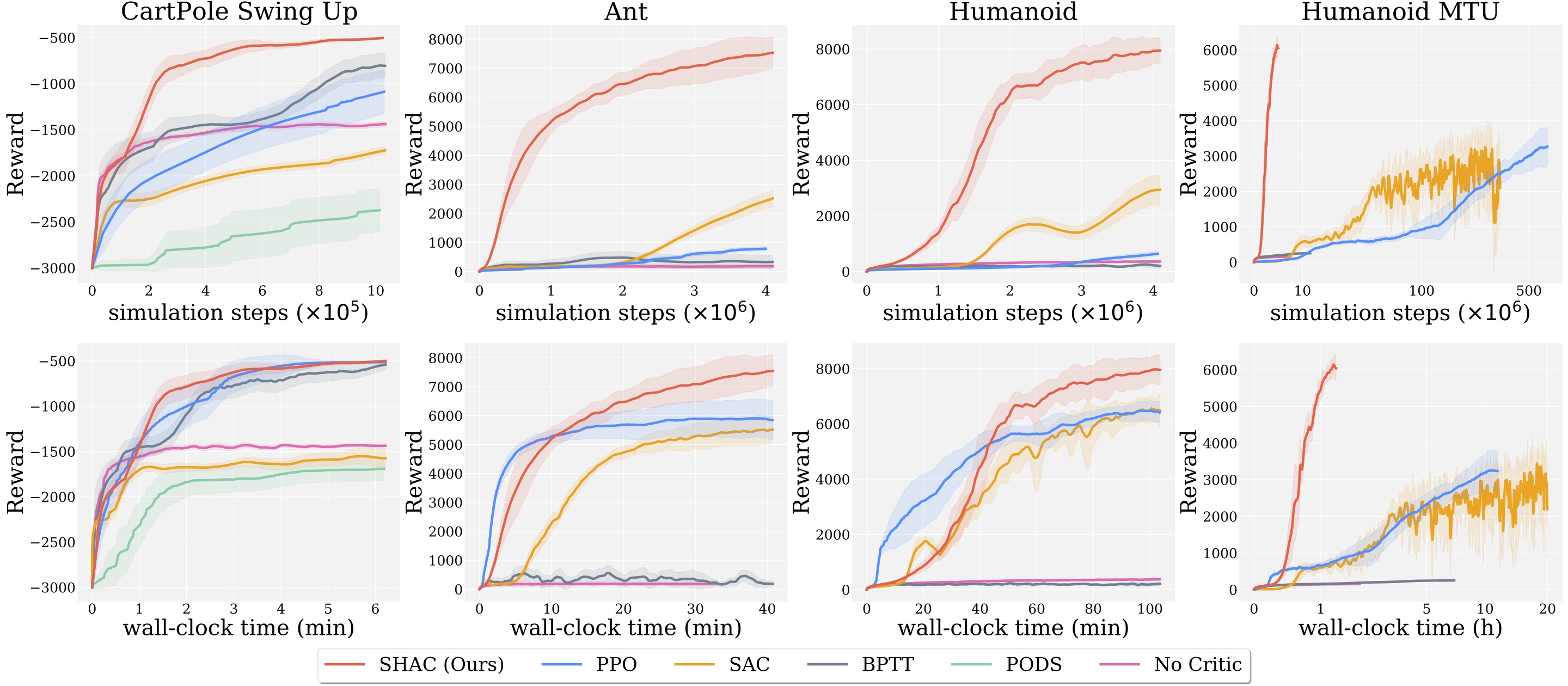

title={Accelerated Policy Learning with Parallel Differentiable Simulation},

author={Xu, Jie and Makoviychuk, Viktor and Narang, Yashraj and Ramos, Fabio and Matusik, Wojciech and Garg, Animesh and Macklin, Miles},

booktitle={International Conference on Learning Representations},

year={2021}

}